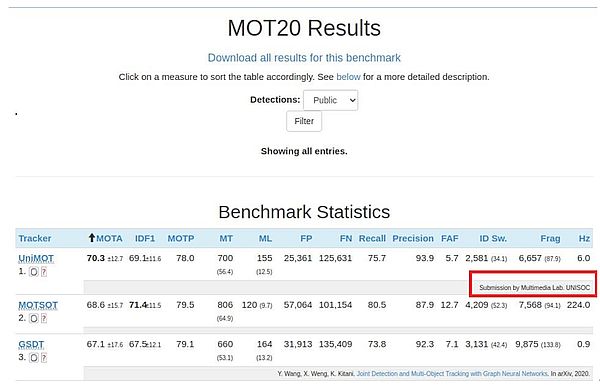

On January 19, 2021, the UNISOC Multimedia Lab algorithm took first place in the internationally authoritative MOT20 Challenge (the benchmark for Multiple Object Tracking). It scored above 70 on the MOTA (Multiple Object Tracking Accuracy) metric and won the global championship. It is the only algorithm on the MOT20 leaderboard with a MOTA score above 70, which places UNISOC in a leading position in the field of multiple object tracking.
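
For readers unfamiliar with the metric, MOTA is the standard CLEAR MOT accuracy score. It combines three error types (missed targets or false negatives, FN; false positives, FP; and identity switches, IDSW) and normalizes their sum by the number of ground-truth objects (GT), with every count summed over all frames. Leaderboards report it as a percentage, so a score above 70 means the combined errors amount to less than 30% of the ground-truth count. The sketch below is a minimal illustration of that formula only. The function name and the counts in the usage line are hypothetical and are not UNISOC's results.

```python
def mota(fn: int, fp: int, idsw: int, num_gt: int) -> float:
    """Return MOTA as a percentage (hypothetical helper for illustration).

    MOTA = 1 - (FN + FP + IDSW) / GT, where every count is summed over
    all frames. The score can be negative when errors exceed GT.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    errors = fn + fp + idsw  # total misses, false alarms and ID switches
    return 100.0 * (1.0 - errors / num_gt)


# Made-up counts for illustration only (not actual MOT20 results).
score = mota(fn=20_000, fp=8_000, idsw=2_000, num_gt=100_000)
print(f"MOTA = {score:.1f}")  # prints "MOTA = 70.0"
```

Because all three error types share a single numerator, a tracker can lose MOTA points through missed pedestrians, spurious detections, or identity mix-ups alike, which is why dense crowds are hard on this metric.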

MOT Challenge, the most authoritative evaluation platform for multi-object tracking, was co-founded by the Technical University of Munich, the University of Adelaide, the Swiss Federal Institute of Technology Zurich, and the Technical University of Darmstadt. Participants in the competition include teams from the University of Oxford, Carnegie Mellon University, Tsinghua University, the Technical University of Munich, the Chinese Academy of Sciences, Microsoft, and many other companies, universities, and research institutions.

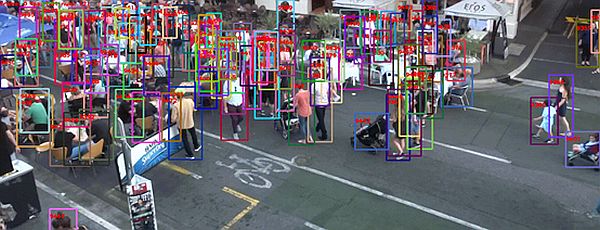

Tracking results on the MOT20-08 sequence

The MOT Challenge provides highly accurate annotation data and comprehensive evaluation metrics for assessing the performance of tracking algorithms and pedestrian detectors. The MOT20 benchmark contains 8 new video sequences, all depicting extremely challenging scenes. First released at the 2019 MOT Challenge Workshop, the dataset averages 246 pedestrians per frame. It also adds a night sequence, challenging existing MOT algorithms on extremely dense scenes, generalization, and other difficulties.

UNISOC's algorithm introduces innovations in network architecture design, loss functions, training data processing, and other areas. To handle scenarios not covered by the competition's training set, and to keep the algorithm real-time when it is actually deployed, UNISOC adopts an end-to-end strategy that performs detection and pedestrian recognition simultaneously. The network size can be scaled to the computing power of different edge devices, allowing flexible deployment across multiple chip solutions.

A key technology for surveillance, vehicles, UAVs, and live events, multi-object tracking can accurately capture key information in video and support further information extraction. It is expected to be widely used in smart cities, the Internet of Things, and other fields.

In intelligent surveillance scenarios, the algorithm can automatically identify, track, and extract targets from complex scenes, understand each target's activity, and thereby monitor and recognize the state of the scene. Multi-object tracking can greatly reduce repetitive manual work and improve both work efficiency and the intelligence and security of monitoring systems.

In live event scenarios, the algorithm can automatically extract athletes' motion states, enabling data statistics, automatic broadcasting, and other functions that unlock more value from the data. In smart vehicle scenarios, the algorithm can obtain movement information for vehicles and pedestrians on the road, providing the decision data needed by applications such as autonomous driving and driver safety assistance.

Image algorithms are being integrated into a growing number of vertical industries, creating multiplier effects and generating innovative businesses and applications that make people's lives better and more convenient. Visit the MOT Challenge official website at https://ift.tt/3phymio.

About UNISOC

UNISOC is a leading fabless semiconductor company committed to R&D in core chipsets for mobile communications and IoT. With 4,500 staff, 17 R&D centres, and 7 customer support centres around the world, UNISOC is one of the largest chipset providers for IoT and connectivity devices in China, one of the top three mobile chipset suppliers globally, and the leading 5G company in the world. Please visit http://www.unisoc.com.

Media Contact

Company: UNISOC Technologies Co., Ltd

Contact: Yueying Tang, PR Team

E-mail: yueying.tang@unisoc.com

Copyright 2021 JCN Newswire. All rights reserved. www.jcnnewswire.com Via JCN Newswire https://ift.tt/2pbRN02